Part 3: The Tool

Outline

In this part, we:

- Introduce our tool for assessing assessments based on the Chemistry Threshold Learning Outcomes (CTLOs).

- Take you through a couple of examples of assessments to highlight some of the key issues to look out for in designing assessments with the CTLOs in mind.

The Tool

If you cast your mind way, way back to the beginning of this workshop, you’ll recall that we introduced the idea of the Chemistry Threshold Learning Outcomes (CTLOs), but we didn’t go into detail. You can find them here!

After you've had a look, let’s take note of a couple of things:

- Firstly, there are five threshold learning outcomes, each with between two and five parts. A given assessment task doesn’t need to cover all five! In fact, as we’ll see in a moment, it might be difficult for a task to even cover more than one.

- Secondly, the CTLOs are relatively agnostic to complexity. This means we can deal with CTLOs at any level (from the developing through to the graduate), which is going to be important when it comes to our assessments.

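The basic premise of the tool is this: to say that a graduate has shown a particular CTLO at a graduate level, they need to have passed an assessment that they would have failed had they not shown that part at the required level.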

This means that an assessment task that claims to assess a part of a CTLO must not only credit the part, but also require it to pass. In evaluating an assessment task, we thus move through two questions:

- Task design: At what level does the task engage with a CTLO?

- Assessment criteria: Which elements are required to pass, and at what level of complexity?

1. Task design

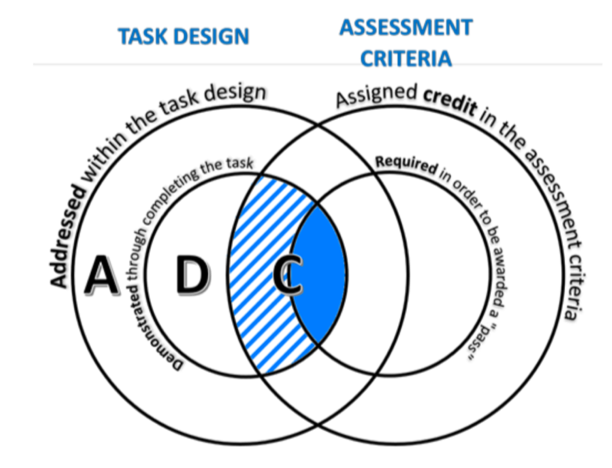

We divide engagement into three levels: Addressed (A), Demonstrated (D), and Credited (C). Each level attributes more weight to the CTLO than the last, from a mere mention through to the allocation of marks.

For a task to address an outcome, it merely needs to make reference to the outcome. At this level, students are exposed to any part of the TLO in any way, either implicitly or explicitly, to any level of achievement through completion of the task as instructed.

At a deeper level of engagement, a task might require the demonstration of an outcome. In this case, students are directly instructed to demonstrate or evidence their attainment of any parts of the TLO, to any level of achievement, through completion of the task as instructed. Note that ‘demonstrated’ does NOT apply if:

- The task is structured as a learning experience in the TLO, not an evaluation of capacity.

- Evidence of student understanding of the TLO is not required to be submitted, despite relevance to the task.

- Students’ capabilities with respect to the TLO are unable to be discerned, regardless of exposure to the TLO.

Finally, and ideally, a task that credits an outcome assigns some affirmation of attainment to it. This can be, for example, ‘marks’, ‘grades’, or compulsory ‘hurdles’ that depend on students providing clear evidence of achievement with respect to the TLO within their submitted work. ‘Credited’ does NOT apply if:

- Demonstration of the TLO is absent from the assessment criteria and is not presented as a compulsory hurdle.

- Assessment criteria are too vague or non-specific to be clearly said to include the TLO.

- Assessment criteria have poor (or no) connection to student capability with respect to the TLO.

As an example, let’s consider a mock assessment task on blackbody radiation that is designed to target only CTLO 1:

1. Understand ways of scientific thinking by:

1.1 recognising the creative endeavour involved in acquiring knowledge, and the testable and contestable nature of the principles of chemistry

1.2 recognising that chemistry plays an essential role in society and underpins many industrial, technological and medical advances

1.3 understanding and being able to articulate aspects of the place and importance of chemistry in the local and global community

There is a myriad of ways we might design this assessment—the CTLO is intentionally broad. Here are just a few that we might think of, classified according to their level of engagement with the outcome:

| Assessment description | Level of engagement with CTLO 1 |
|---|---|
| Students are given data and required to plot a graph of blackbody radiation under the Rayleigh-Jeans law and Planck’s law, that is, with and without the UV catastrophe. | This addresses parts of CTLO 1 because this is a historically important transition in scientific understanding, but students are not actually required to demonstrate any understanding of this context. |
| Students are required to research or derive the Rayleigh-Jeans law and Planck’s law for blackbody radiation, and plot graphs of both at high and low temperatures, but marks are only awarded for the plots. | This demonstrates parts of CTLO 1 because it appears that a pass-level student has evidenced their understanding of the historical transition by completing the graph. |
| Students are required to research or derive the Rayleigh-Jeans law and Planck’s law for blackbody radiation, are awarded marks for the derivation and an explanation of the difference between and implications of both, and plot graphs of both at high and low temperatures. In addition, they provide a short introduction that describes the historical change in thinking and the implications of the shift for scientific and general communities. | In this case, parts of CTLO 1 are credited because there is a grade or mark associated with the successful demonstration of the outcome. |
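The three levels can also be read as a short chain of yes/no questions. The sketch below is only an illustration of that reading, not the logic of the tool itself: the `TaskDesign` class, its field names, and the `engagement_level` function are hypothetical, and the three answers per task are our own reading of the mock blackbody tasks in the table above.

```python
from dataclasses import dataclass


@dataclass
class TaskDesign:
    """Hypothetical yes/no answers about one task and one CTLO."""
    exposes_outcome: bool        # the task refers to the outcome in some way
    evidence_required: bool      # students must submit evidence of attainment
    in_criteria_or_hurdle: bool  # marks, grades or a hurdle depend on that evidence


def engagement_level(task: TaskDesign) -> str:
    """Return 'A' (addressed), 'D' (demonstrated), 'C' (credited) or 'none'."""
    if not task.exposes_outcome:
        return "none"
    if not task.evidence_required:
        return "A"
    if not task.in_criteria_or_hurdle:
        return "D"
    return "C"


# Our reading of the three mock tasks, in table order (assumed answers).
plots_from_given_data = TaskDesign(True, False, False)
research_but_only_plots_marked = TaskDesign(True, True, False)
context_and_derivation_marked = TaskDesign(True, True, True)

levels = [
    engagement_level(task)
    for task in (
        plots_from_given_data,
        research_but_only_plots_marked,
        context_and_derivation_marked,
    )
]
print(levels)  # ['A', 'D', 'C']
```

Each level presupposes the one before it: a task cannot credit an outcome for which no evidence is submitted.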

2. Assessment criteria

Even if a task credits a CTLO, it doesn’t necessarily accomplish the fundamental goals of the Threshold Learning Outcomes project. For the claim of CTLOs to mean anything, assessments must credit the CTLO to the extent that a student would fail if they did not adequately display their mastery of the requirement.

The obvious way to do that would be to assign enough weight to the CTLO in the marking criteria to achieve the pass requirement. But this might not be the best way; after all, you can really only assess one CTLO per assessment if you need to allot half the marks to one.

The main alternative is to structure assessments such that sufficient demonstration of a CTLO (or a selection of them) is a hurdle to passing the assessment or course. There may only be a few marks associated with the CTLO in the final grade, but a student would fail if they did not successfully demonstrate that CTLO at the requisite level.
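To make the two routes concrete, here is a minimal sketch of the pass decision. It rests on assumptions of ours: marks are out of 100, the pass mark is 50, and the component names (`ctlo`, `rest`), the `passes` function and the hurdle minimum of 5 are all hypothetical. In each case the student shows nothing for the CTLO and earns full marks on everything else.

```python
def passes(scores, pass_mark=50, hurdles=None):
    """Decide a pass from raw marks per component.

    scores:  dict mapping component name -> marks earned
    hurdles: dict mapping component name -> minimum marks required
    The pass mark of 50 is an assumption, not part of the tool.
    """
    hurdles = hurdles or {}
    total = sum(scores.values())
    hurdles_met = all(
        scores.get(name, 0) >= minimum for name, minimum in hurdles.items()
    )
    return total >= pass_mark and hurdles_met


# The student earns 0 for the CTLO component and full marks elsewhere.
light_weighting = {"ctlo": 0, "rest": 90}  # CTLO worth 10 of 100 marks
heavy_weighting = {"ctlo": 0, "rest": 40}  # CTLO worth 60 of 100 marks

light_result = passes(light_weighting)                        # passes anyway
heavy_result = passes(heavy_weighting)                        # fails on total
hurdle_result = passes(light_weighting, hurdles={"ctlo": 5})  # fails on hurdle

print(light_result, heavy_result, hurdle_result)  # True False False
```

With a light weighting and no hurdle, the student passes without showing the CTLO at all. Either a heavy weighting or a hurdle blocks that, but only the hurdle leaves most of the marks free for other outcomes.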

The tool itself

A downloadable version of the tool can be found here. An online version is coming soon. To use the tool, simply download the Microsoft Excel file and answer the questions from the drop-down menus on the third sheet with respect to the assessment you are evaluating.

There are two keys to understanding the output of the evaluation. Firstly, this Venn diagram summarises the categories we’ve just described. The ideal assessment task sits at the centre:

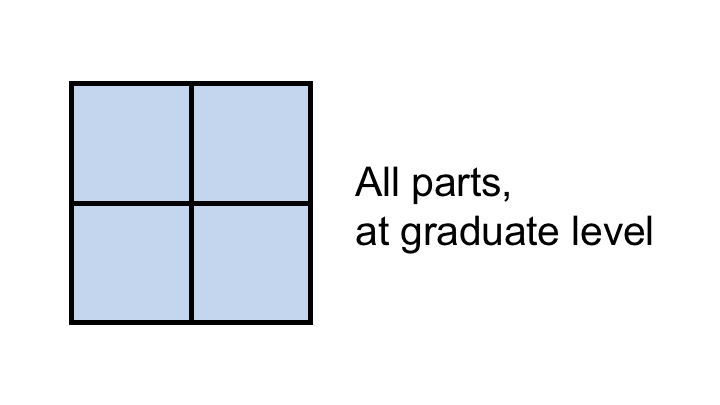

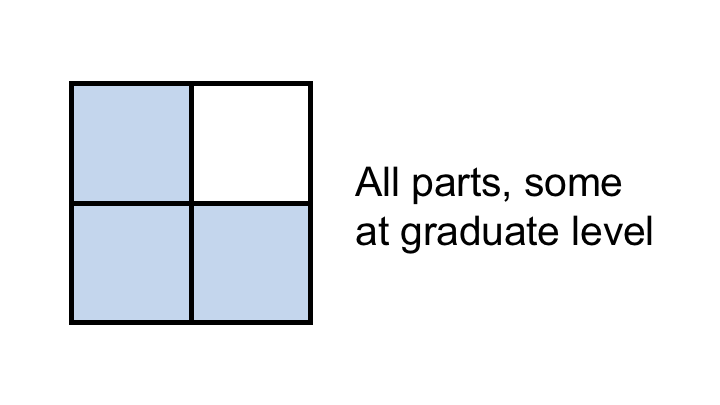

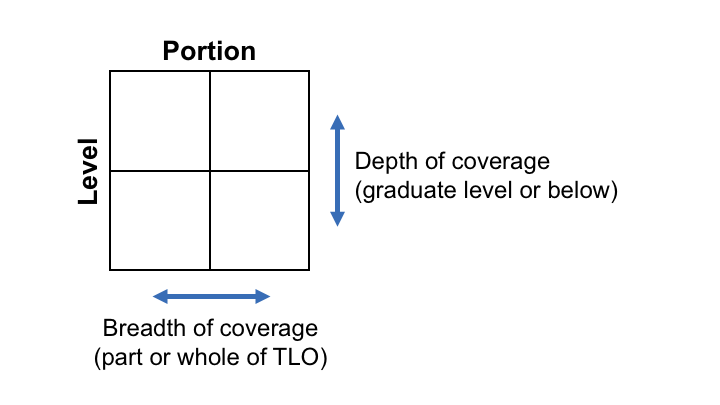

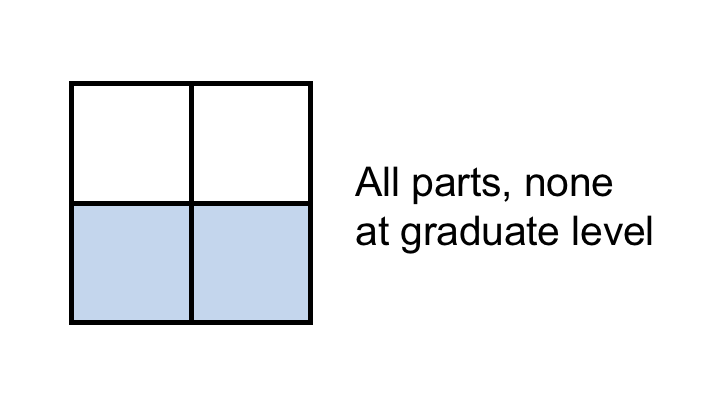

The full or partial colouring comes into play in the final output of the tool: when we assess a task or series of tasks for a particular CTLO, the output is a 2x2 grid that summarises the efficacy of the task:

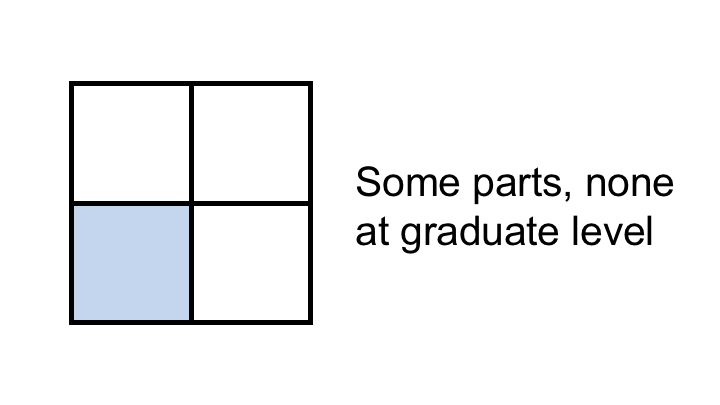

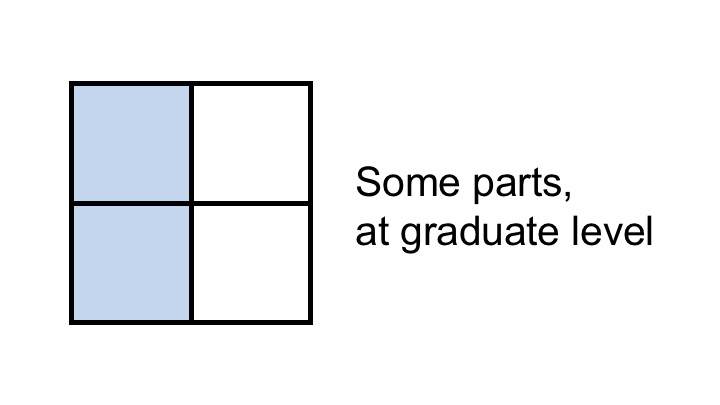

The way the grid is filled (along each axis, and with the full or partial colouring of the Venn diagram) tells us how well the assessment treats a particular CTLO.

A couple of examples

Now that we've seen the tool and understand its components, we're going to take a deeper dive into some assessments to see how it's applied. We’ve already seen some examples of assessment tasks and their learning outcomes in this workshop, but we’re going to go through two to highlight some common mistakes (example 1) and how they can be avoided (example 2).

Case Study 1: Preparation and Investigation of Surfaces of Partially Wetting, Hydrophobic, and Superhydrophobic Surfaces.

For the sake of continuity and reinforcement, let’s return to our original assessment example: the lab task that required the preparation and analysis of different glass coatings.

Recall that students work in pairs to:

- Prepare a set of superhydrophobic surfaces by coating small glass tiles with a series of silicon-based organic polymers.

- Record the contact angle of water droplets on each surface.

- Analyse the differences in hydrophobicity of the different surfaces.

- Evaluate the efficacy of the different coating methods.

They are then assessed with a short (2-page) summary task. No grade is given for laboratory skills, except insofar as they are represented through the quality of results obtained.

The distribution of marks for the assessment is:

| Component | Weight (%) | Description |
|---|---|---|
| Aims | 5 | Succinct statement of purpose of experiment, including outline of relevance of techniques used. |
| Methods | 5 | Succinct description of method in the correct tense and voice, containing sufficient detail for the experiment to be repeated by another researcher, but free of unnecessary information. Clear description of operation of experimental and surface modification techniques used. |
| Results | 30 | (Photos of contact angles) Photos of droplets clearly show the key differences, and include appropriate figure caption(s) below the photos with corresponding contact angle for each image. Values of contact angles clearly presented in an appropriate table or figure, to the appropriate number of significant figures, including uncertainty. The nature of the uncertainty is described. |
| Discussion | 30 | The significance of the results (in the context of the entire project) is linked to the aims. The role of surface chemistry and structure modification on wettability is concisely summarised along with key results. Potential further research is proposed based on insight gained from results. |
| Conclusion | 10 | Well explained, comprehensive and critical analysis of the effect of nature and size of intermolecular interactions, with reference to Young's equation, the role of surface structure, and their effect in all systems studied. Insight into the nature of surface coatings used, potential variations, and their effects on wettability experiments. |
| Formatting | 15 | Neat, organised with headings, with very few spelling/grammatical errors. Report of an appropriate length. Correct use of chemical notation, including symbols, units, significant figures and uncertainty. |
| References | 5 | References appropriate and format consistent. Callouts to tables and figures appropriate. |

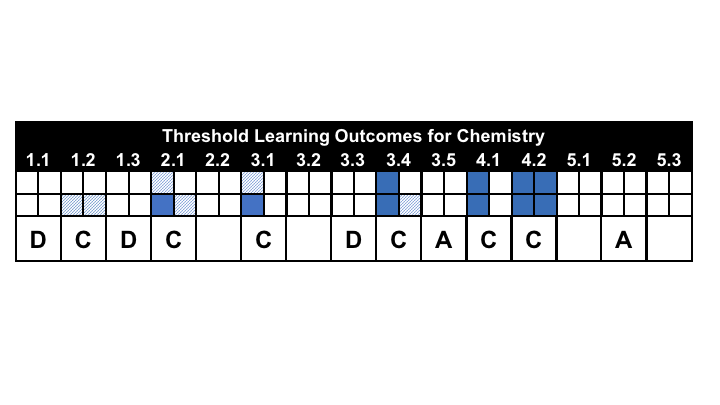

And, using our tool, the assessment is evaluated as:

In particular, note that CTLO 3.3 (“Applying recognised methods and appropriate practical techniques and tools, and being able to adapt these techniques when necessary”) is only demonstrated—even though the task is an assessment of a laboratory, and this CTLO is implicit in the assessment structure, it’s not explicitly credited at all.

CTLO 3.5 is a similar case: even though the lab work is conducted in pairs, the assessment does not even require demonstration of any teamwork.

Though this task has some wins, in attempting to cover many outcomes it covers almost none of them effectively. The next case study provides an excellent alternative way of structuring the laboratory and project assessment.

Case Study 2: Team-Based Analytical Lab Report

In this third-year ‘capstone’ project, which spans an entire semester-long subject, students work in teams to “plan, execute and report on an investigation of a complex authentic industrial ‘waste’ material (solid or liquid or combination) including background research into the nature of their sample and possible contaminants, and state regulations of disposal options.” The focus is on building teamwork skills, so that ‘teamwork’ constitutes 40% of the assessment (as an explicit component (20%), an oral project pitch (10%), and a presentation of project outcomes (10%)). The rest of a student’s grade comes from their laboratory practice and recordkeeping (20%) and a 30-40 page technical written report (40%).

More explicitly, the assessment rubric looks like this:

| Assessment | Weight (%) | Description |
|---|---|---|
| Laboratory performance | 20 | Individual: in-class observation and assessment of your actual laboratory practice, assessment of your laboratory notebook. |
| Individual contribution to team work. This assessment item is a hurdle component, requiring a minimum of 10/20 to pass the subject. | 20 | Individual, in four parts: (1) Individuals are assessed in lab for collaborative activities and practices that support their team members. (2) Regular contributions to online team discussion. (3) Online end of session peer and self-assessment of individual input to team communication and collaborative processes. (4) Individual students write two scaffolded self-reflection pieces on effective functioning in the team, one at beginning of session and one at end of week 13. Submitted to Moodle. |
| Week 4 Project Proposal | 5 | Team: Project proposal with introduction, methods and rationale; summary of planning to date; answers to set questions. Submitted via Moodle. |
| Week 9 Results Summary | 5 | Individual: This is your individual table of results summarising all team data to date. |
| Major Report. This assessment item is a hurdle component, requiring a minimum of 20/40 to pass the subject. | 40 | Individual: Written as a technical report, 30-40 pages. |
| Team Presentation | 10 | Powerpoint or other team presentation to class. Assessed on content, communication skills and team work. |
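One way to see what the hurdles change is to ask, for each row of the rubric, whether a student could earn nothing for that item and still pass the subject. The sketch below uses the weights and hurdle minimums from the table above. The overall pass mark of 50, the dictionary keys, and the `required_components` function are assumptions of ours, not part of the subject's rules or of the tool.

```python
# Weights and hurdle minimums taken from the rubric table above.
WEIGHTS = {
    "laboratory_performance": 20,
    "team_contribution": 20,
    "project_proposal": 5,
    "results_summary": 5,
    "major_report": 40,
    "team_presentation": 10,
}
HURDLE_MINIMUMS = {"team_contribution": 10, "major_report": 20}
ASSUMED_PASS_MARK = 50  # assumption: the source does not state the overall pass mark


def required_components(weights, hurdle_minimums, pass_mark):
    """List the components a student cannot score zero on and still pass."""
    total_available = sum(weights.values())
    required = []
    for name, weight in weights.items():
        # Best possible total if this component earns nothing.
        best_total_without = total_available - weight
        blocked_by_hurdle = hurdle_minimums.get(name, 0) > 0
        if blocked_by_hurdle or best_total_without < pass_mark:
            required.append(name)
    return required


with_hurdles = required_components(WEIGHTS, HURDLE_MINIMUMS, ASSUMED_PASS_MARK)
without_hurdles = required_components(WEIGHTS, {}, ASSUMED_PASS_MARK)

print(with_hurdles)     # ['team_contribution', 'major_report']
print(without_hurdles)  # []
```

Under these assumptions, weighting alone would make no single item essential, because even the 40% report could be skipped by a student who earned every other mark. The two hurdles are what make the teamwork contribution and the major report compulsory.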

What is interesting—and highly effective—is the inclusion of so-called hurdle requirements. Their inclusion makes fully crediting multiple CTLOs within a single unit possible, and easily so: students cannot pass unless they demonstrate associated CTLOs.

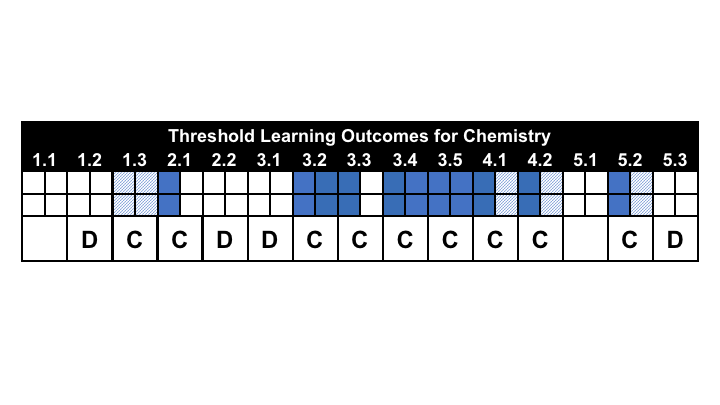

Because of the hurdle requirements, we’ve given this assessment scheme the following evaluation:

Note that three CTLOs are completely covered at a graduate level; some parts of four others are covered at a graduate level. This is remarkable! The hurdle requirements and simple focus of the assessment scheme made this possible—because the assessment tasks build on each other and cover an entire unit, they are able to do far more.

This assessment is also a great example of an effective application of the backwards design process. You can find out more about it here.

Summary

Let's summarise the key ideas:

- The core idea behind the assessment tool is that in order to say a graduate has shown a particular CTLO at a graduate level, they need to have passed an assessment they would otherwise have failed, had they not successfully shown that part at the required level.

- There are three levels of engagement in a task's design. A task can address, demonstrate, or credit a CTLO, depending on the weight attributed to the outcome.

- In order for a CTLO to mean anything, the task needs to credit the CTLO to the extent that a student would fail if they did not adequately display their mastery of the outcome. This can be achieved by weighting an outcome enough, or by introducing hurdle requirements in the assessment.

In the next section, we wrap up the workshop and point you towards a collection of assessment exemplars, alongside their outcomes from the tool.

Unless otherwise noted, content on this site is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License
